Summary

Given a simple Ruby project (say this one), how easy is it to set up a GitHub action on the repo so that it runs specs on push? And then say SimpleCov, too?

TL;DR: Skipping the trial and error and the credits (all below), just add this as .github/workflows/run-tests.yml to your repo and push it and you’re away:

    name: Run tests

    on: [push, pull_request]

    jobs:
      run-tests:
        runs-on: ubuntu-latest
        permissions:
          contents: read
          pull-requests: write
        steps:
          - uses: actions/checkout@v4
          - name: Set up Ruby
            uses: ruby/setup-ruby@v1
            with:
              bundler-cache: true
          - name: Run tests
            run: bundle exec rspec -f j -o tmp/rspec_results.json -f p
          - name: RSpec Report
            uses: SonicGarden/rspec-report-action@v2
            with:
              token: ${{ secrets.GITHUB_TOKEN }}
              json-path: tmp/rspec_results.json
            if: always()
          - name: Report simplecov
            uses: aki77/simplecov-report-action@v1
            with:
              token: ${{ secrets.GITHUB_TOKEN }}
            if: always()
          - name: Upload simplecov results
            uses: actions/upload-artifact@master
            with:
              name: coverage-report
              path: coverage
            if: always()

Adding RSpec

I did a search and found Dennis O’Keeffe’s useful article and since I already had a repo I just plugged in his .github/workflows/rspec.yml file:

    name: Run RSpec tests

    on: [push]

    jobs:
      run-rspec-tests:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v2
          - name: Set up Ruby
            uses: ruby/setup-ruby@v1
            with:
              # Not needed with a .ruby-version file
              ruby-version: 2.7
              # runs 'bundle install' and caches installed gems automatically
              bundler-cache: true
          - name: Run tests
            run: |
              bundle exec rspec

and it just ran, after three slight tweaks:

- My repo already had a .ruby-version file, so I could skip the ruby-version setting and the comment above it
- the checkout@v2 action now gives a deprecation warning, so I upped it to checkout@v4
- because my local machine was a Mac, I also needed to run bundle lock --add-platform x86_64-linux and push that for the action to run on ubuntu-latest. Unsurprisingly.
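
The platform issue in the last tweak can be spotted before pushing. A minimal sketch, assuming the usual Gemfile.lock layout in which a PLATFORMS heading is followed by indented platform names and closed by a blank line; the helper name lockfile_platforms and the sample lockfile text are made up for illustration:

```ruby
# Hypothetical helper: list the platforms recorded in a Gemfile.lock.
# Assumes a "PLATFORMS" heading followed by indented names and a blank line.
def lockfile_platforms(lockfile_text)
  platforms = []
  in_section = false
  lockfile_text.each_line do |line|
    if line.strip == "PLATFORMS"
      in_section = true
    elsif in_section
      break if line.strip.empty? # a blank line ends the section
      platforms << line.strip
    end
  end
  platforms
end

# Hand-written sample, not the repo's real lockfile.
SAMPLE_LOCKFILE = <<~LOCK
  GEM
    remote: https://rubygems.org/
    specs:
      rspec (3.13.0)

  PLATFORMS
    arm64-darwin-23

  DEPENDENCIES
    rspec
LOCK

platforms = lockfile_platforms(SAMPLE_LOCKFILE)
unless platforms.include?("x86_64-linux")
  puts "Missing x86_64-linux; run: bundle lock --add-platform x86_64-linux"
end
```

Against a real project you would pass in File.read("Gemfile.lock") instead of the sample text.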

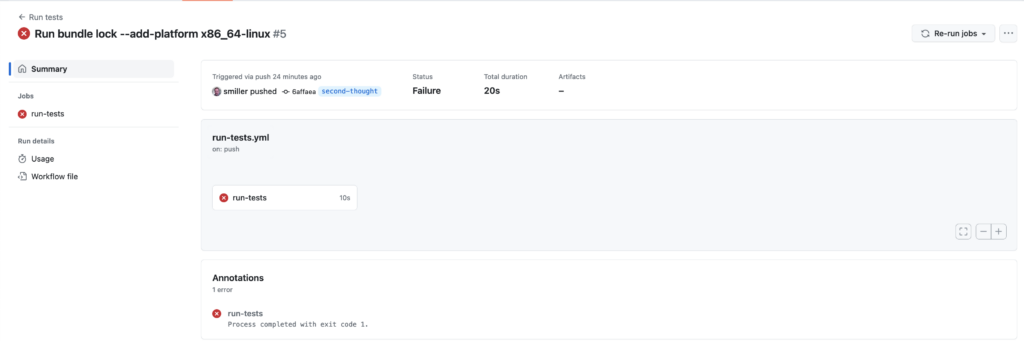

The specs failed, which was unexpected, since they were passing locally, and it would be more informative if it named the specific failure on the summary page instead of needing me to click into run-tests to see it:

So I went to the GitHub Actions Marketplace and searched for “rspec”. There’s an RSpec Report action that looks like it does what we want, provided we change the bundle exec rspec line to output the results as a JSON file, so I changed the end of the workflow file to

    - name: Run tests
      run: bundle exec rspec -f j -o tmp/rspec_results.json -f p
    - name: RSpec Report
      uses: SonicGarden/rspec-report-action@v2
      with:
        token: ${{ secrets.GITHUB_TOKEN }}
        json-path: tmp/rspec_results.json
      if: always()

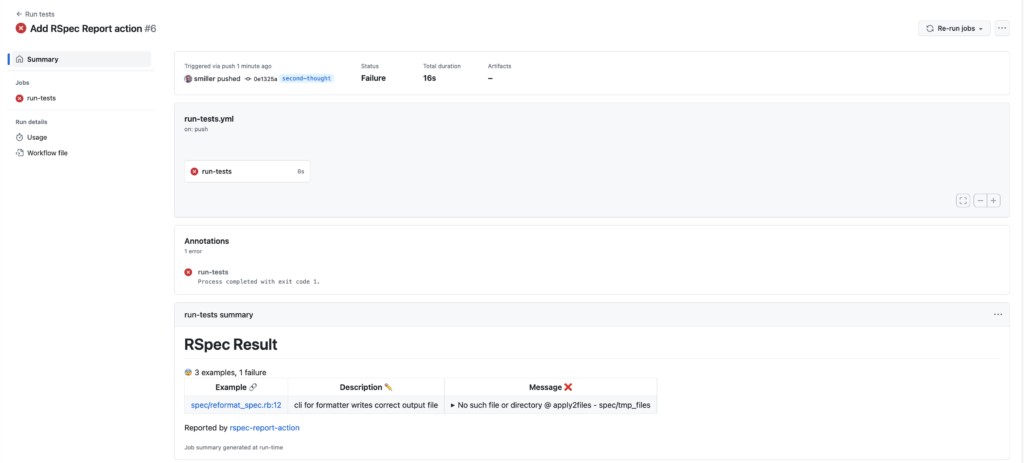

pushed, and tried again, and it failed again, but this time with details:

The failure is not specific to GitHub Actions (Digressions on the repo #1: RSpec). The TL;DR is that I had manually created a spec/tmp_files directory for files the tests wrote, and because of the way I was doing setup and teardown it would have continued to work on my machine and nobody else’s, without my noticing, until I erased my local setup or tried it on a new machine. This was a very useful early warning.
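
For a sense of what the report action has to work with, here is a minimal sketch of reading RSpec's JSON formatter output and listing the failures. The sample JSON is hand-written for illustration and trimmed to the fields the sketch uses (a real file carries more), the helper name failed_examples is hypothetical, and this is not how the RSpec Report action itself is implemented:

```ruby
require "json"

# Hand-written sample in the shape of RSpec's JSON formatter output,
# trimmed to the fields this sketch uses. Not real output from the repo.
SAMPLE_RESULTS = <<~RESULTS
  {
    "examples": [
      { "full_description": "Formatter writes an output file",
        "status": "failed",
        "file_path": "./spec/formatter_spec.rb",
        "line_number": 12,
        "exception": { "class": "Errno::ENOENT",
                       "message": "No such file or directory" } },
      { "full_description": "Formatter reads an input file",
        "status": "passed",
        "file_path": "./spec/formatter_spec.rb",
        "line_number": 5 }
    ],
    "summary": { "example_count": 2, "failure_count": 1, "pending_count": 0 }
  }
RESULTS

# Hypothetical helper: keep only the examples whose status is "failed".
def failed_examples(json_text)
  JSON.parse(json_text).fetch("examples").select { |ex| ex["status"] == "failed" }
end

failed_examples(SAMPLE_RESULTS).each do |ex|
  puts "#{ex['file_path']}:#{ex['line_number']} #{ex['full_description']}"
  puts "  #{ex.dig('exception', 'class')}: #{ex.dig('exception', 'message')}"
end
```

In the workflow above, the equivalent input would be the contents of tmp/rspec_results.json.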

The if: always() on the RSpec Report block is worth a mention. If the Run tests block exits with failing specs, subsequent blocks won’t run by default. If we need them to run anyway, we need to add if: always(), which is why it’s included here in all subsequent blocks.
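
The mechanism underneath is the exit status: a step whose command exits non-zero is marked as failed, and later steps are skipped unless they opt in. A small sketch of the exit-status side in plain Ruby, using a child Ruby process as a stand-in for a failing bundle exec rspec (the stand-in command is an assumption for illustration; nothing here talks to GitHub):

```ruby
require "rbconfig"

# Stand-in for a failing test run: a child process that exits with status 1,
# which is what rspec does by default when any example fails.
succeeded = system(RbConfig.ruby, "-e", "exit 1")
status = $?.exitstatus

puts "succeeded: #{succeeded.inspect}, exit status: #{status}"
# prints "succeeded: false, exit status: 1"
```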

Adding SimpleCov

Since the project wasn’t already using SimpleCov, step one was setting that up locally (Digressions on the repo #2: SimpleCov).

That sorted, I went back to the GitHub Actions Marketplace and searched for “simplecov” and started trying alternatives. The currently-highest-starred action, Simplecov Report, drops in neatly after running the specs and the RSpec Report:

    - name: Simplecov Report
      uses: aki77/simplecov-report-action@v1
      with:
        token: ${{ secrets.GITHUB_TOKEN }}

It defaults to requiring 90% coverage, but you can set a different value by passing in a failedThreshold:

    - name: Simplecov Report
      uses: aki77/simplecov-report-action@v1
      with:
        failedThreshold: 80
        token: ${{ secrets.GITHUB_TOKEN }}

and of course if we want it to run whether specs fail or not, we need to add if: always().
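
To make the threshold concrete, here is a minimal sketch of the kind of check a failedThreshold implies. It assumes the coverage figure comes from SimpleCov's coverage/.last_run.json and that the file has the shape shown in the sample (I believe recent SimpleCov versions write a "line" key and older ones wrote "covered_percent", so the sketch accepts either). The helper names are hypothetical and this is not the action's actual code:

```ruby
require "json"

# Hand-written sample; assumed shape of coverage/.last_run.json.
SAMPLE_LAST_RUN = '{ "result": { "line": 87.5 } }'

# Hypothetical helper: pull the coverage percentage out of the JSON.
def covered_percent(last_run_json)
  result = JSON.parse(last_run_json).fetch("result")
  result["line"] || result["covered_percent"]
end

# Hypothetical helper: does the coverage reach the threshold?
def meets_threshold?(last_run_json, threshold)
  covered_percent(last_run_json) >= threshold
end

puts meets_threshold?(SAMPLE_LAST_RUN, 90) # false: 87.5 is below the default
puts meets_threshold?(SAMPLE_LAST_RUN, 80) # true: 87.5 clears a lowered bar
```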

So, I added

    - name: Simplecov Report
      uses: aki77/simplecov-report-action@v1
      with:
        token: ${{ secrets.GITHUB_TOKEN }}
      if: always()

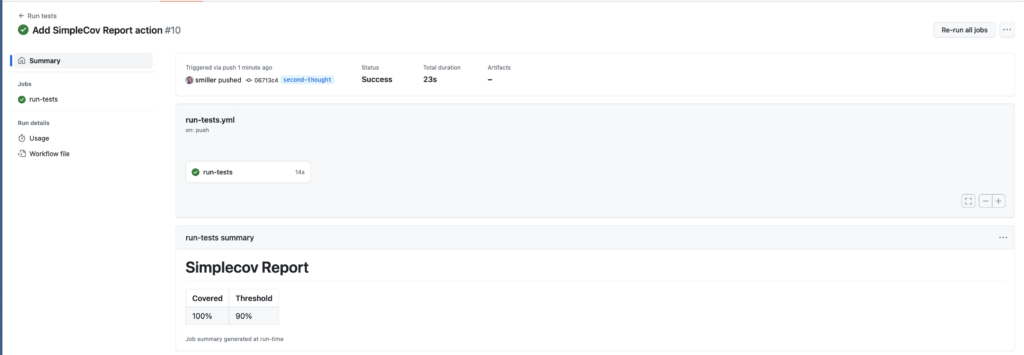

and pushed. That passed:

That said, as with the spec results, it would be useful to see more detail. I remembered a previous project where we’d uploaded the SimpleCov coverage report to GitHub so it showed up among the artifacts, and the steps in Jeremy Kreutzbender’s 2019 article still work for that. We can add a fourth block to our file:

    - name: Upload coverage results
      uses: actions/upload-artifact@master
      with:
        name: coverage-report
        path: coverage
      if: always()

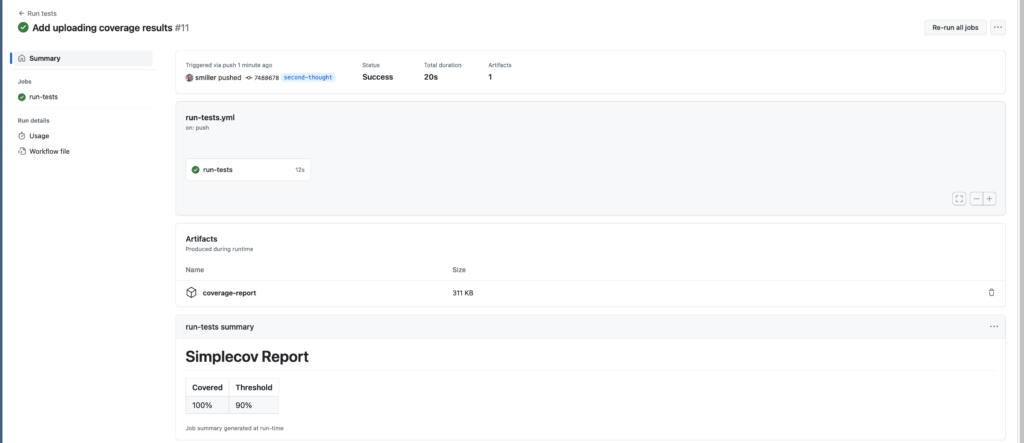

and push, and that gives us access to the coverage report:

which we can download and explore the details down to line numbers, even if our local machine is on a different branch.

And, for pushes, we’re done. But there are two more things we need to add for it to work with pull requests as well.

For Pull Requests

The first thing to do is to modify the action file so that we trigger the workflow on pull requests as well as pushes:

on: [push, pull_request]

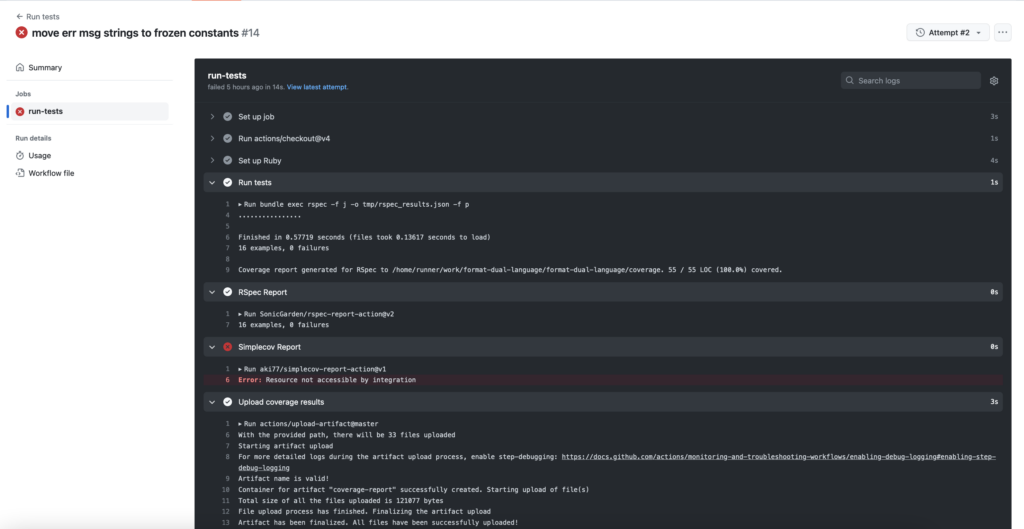

At which point, it feels like everything should work, but if we create a PR, the SimpleCov Report fails, with Error: Resource not accessible by integration:

It ran the code coverage, as we can see from the end of the Run tests block, it uploads the coverage results, but the SimpleCov Report block fails.

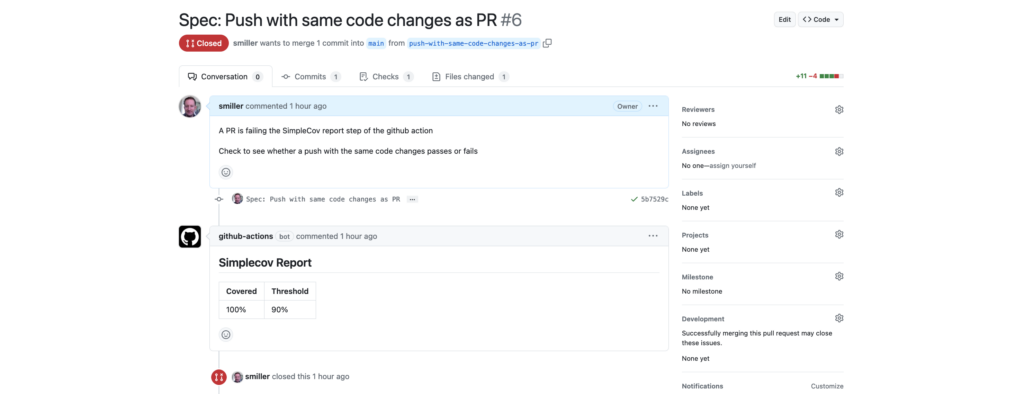

This is because, in the PR case, the SimpleCov Report tries to write a comment to the PR with its coverage report:

and to enable it to do that, we need to give it permission, so we need to insert into the action file:

    permissions:
      contents: read
      pull-requests: write

And that fixes the SimpleCov Report error and gets us to a passing run.

I was initially unwilling to do this because pull-requests: write felt like a dangerously wide-scoped permission to leave open, but, on closer examination, the actual permissions that it enables are much more narrowly scoped, to issues and comments and labels and suchlike.

(Note that we only need to add these permissions because SimpleCov Report is adding a comment to the PR: if we’d picked a different SimpleCov reporting action that didn’t write a comment, we would only have needed on: [push, pull_request] to get the action to work on pushes and pull requests.)

This was an interesting gotcha to track down, but now we’re done, for both pushes and pull requests.

With thanks to my friend Ian, who unexpectedly submitted a pull request for the repo which exposed the additional wrinkles that had to be investigated here.

Appendix: Digressions on the Repo

#1: RSpec

The first time I ran the RSpec action it failed, which usefully revealed that the setup in one of my tests was relying on a manual step.

The repo takes an input file and writes an output file, so in spec/ I’ve got spec/example_files for the files it starts from and spec/tmp_files for the new files it writes. I had created that directory locally and was running

    before do
      FileUtils.rm_r("spec/tmp_files")
      FileUtils.mkdir("spec/tmp_files")
    end

Which only worked, of course, because before the first run I’d manually added the directory, which I didn’t remember until the GitHub action tried and failed because it had no idea about that manual step.

The simplest fix would be to replace it with

    before do
      FileUtils.mkdir("spec/tmp_files")
    end

    # ... tests

    after do
      FileUtils.rm_r("spec/tmp_files")
    end

but because I was using the specs to drive the implementation and I was eyeballing the produced files as I went, I didn’t want to delete them in teardown, so I did this instead:

    before do
      FileUtils.rm_r("spec/tmp_files") if Dir.exist?("spec/tmp_files")
      FileUtils.mkdir("spec/tmp_files")
    end

after which both the local and the GitHub action versions of the tests passed. This discovery was an unexpected but very welcome benefit of setting up the GitHub action to run specs remotely.
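
An alternative that avoids the existence check altogether, sketched here against a throwaway temporary directory rather than the repo's spec/tmp_files: FileUtils.rm_rf does nothing if the path is missing and FileUtils.mkdir_p does not complain if it already exists, so the pair behaves the same on a clean checkout and on a machine with leftovers. The helper name reset_dir is made up for illustration:

```ruby
require "fileutils"
require "tmpdir"

# Hypothetical helper: leave `path` as an existing, empty directory.
def reset_dir(path)
  FileUtils.rm_rf(path)   # no error if path does not exist
  FileUtils.mkdir_p(path) # creates it, along with any missing parents
end

Dir.mktmpdir do |root|
  tmp_files = File.join(root, "spec", "tmp_files")

  reset_dir(tmp_files) # first run: nothing there yet
  File.write(File.join(tmp_files, "out.txt"), "leftover")
  reset_dir(tmp_files) # later run: leftovers are removed

  puts Dir.children(tmp_files).empty? # true
end
```

In a spec this would become before { reset_dir("spec/tmp_files") }, which still leaves the files from the last run in place for eyeballing.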

#2: SimpleCov

To add SimpleCov locally, we add gem "simplecov" to the Gemfile, require and start it from the spec/spec_helper.rb file (filtering out spec files from the report):

    require 'simplecov'

    SimpleCov.start do
      add_filter "/spec/"
    end

and add coverage/ to the .gitignore file so we aren’t committing or pushing the files from local coverage runs.

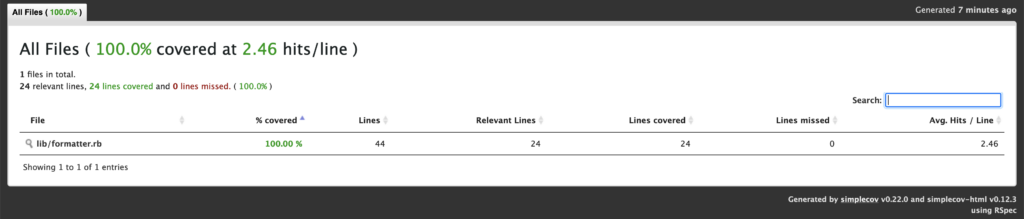

Then we can run RSpec locally, open the coverage/index.html file, and discover that though we know that both lib/ files are being exercised in the specs, only one of them shows up in the coverage report:

And this makes sense, unfortunately, because lib/formatter.rb contains the class which does the formatting, and lib/reformat.rb is a script for the command-line interface which is largely optparse code and param checking. But it makes for a misleading coverage report.

We can start fixing this by moving everything but the command-line interface and optparse code out of lib/reformat.rb into, say, lib/processor.rb, and have the CLI call that. The new file still won’t show up in the coverage report because it isn’t being tested directly, but we can add tests against Processor directly so that it does.

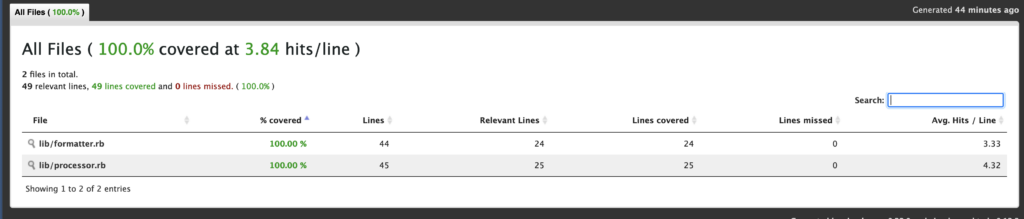

Having done that, we get a much more satisfactory coverage report:

This still leaves a very small bit of code in the CLI that isn’t covered by the coverage report:

    option_parser.parse!

    variant = ARGV[0]

    if (variant.nil? && options.empty?) || variant == "help"
      puts option_parser
    end

    if variant == "alternating"
      Processor.new(options: options).process
    end

but we have tests that will break if that doesn’t work, so we decide we can live with that. (If it got more complicated, with multiple variants calling multiple processors, we could pull the variant-handling conditionals into their own class and test that directly too. But it hasn’t yet.)
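
If it did get that complicated, the extraction might look something like this. It is a sketch only: the Cli class, its constructor arguments, the usage string, and the stand-in Processor are all hypothetical, not code from the repo. Only the shape of the conditionals and the Processor.new(options: options).process call come from the snippet above.

```ruby
# Stand-in so the sketch runs on its own; the real Processor lives in lib/.
class Processor
  def initialize(options:)
    @options = options
  end

  def process
    "processed with #{@options.inspect}"
  end
end

# Hypothetical wrapper around the variant-handling conditionals.
class Cli
  def initialize(help_text:, processor_class: Processor)
    @help_text = help_text
    @processor_class = processor_class
  end

  # Returns the help text, the processor's result, or nil for unknown variants.
  def run(variant, options)
    return @help_text if (variant.nil? && options.empty?) || variant == "help"
    return @processor_class.new(options: options).process if variant == "alternating"

    nil
  end
end

cli = Cli.new(help_text: "Usage: reformat [options] VARIANT")
puts cli.run("help", {})
puts cli.run("alternating", { input: "in.txt" })
```

Returning values instead of calling puts inside the class keeps it easy to assert on in a spec; the script itself would print whatever run returns.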

Sean Miller: The Wandering Coder
